Data Engineering

Building the Foundation for Scalable, Intelligent, and Data-Driven Systems

At SparkBrains, we design and implement robust data engineering solutions that turn raw, scattered data into structured, high-quality assets ready for advanced analytics, machine learning, and business intelligence. Our expertise spans cloud platforms such as AWS, Azure, and GCP, as well as modern data technologies including Snowflake, Databricks, Apache Airflow, Apache Kafka, and Apache Spark, among others. We build real-time and batch processing pipelines, architect lakehouses and data warehouses, and integrate APIs, legacy systems, IoT streams, and third-party platforms into a single data flow. From ingestion and transformation to orchestration, governance, and quality control, we deliver scalable, secure, and future-ready data infrastructure tailored to your business goals. Whether you're modernizing legacy systems or building a new data platform from scratch, we help you get the full value from your data.

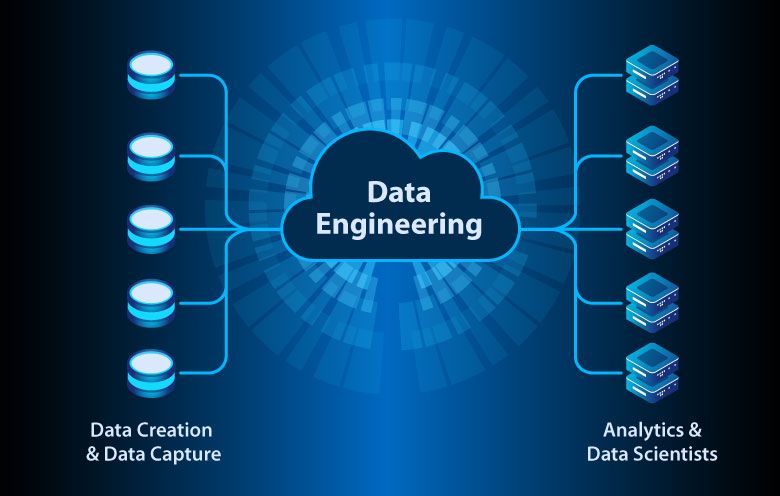

Data Engineering for Effective Data Management

At SparkBrains, our data engineers build the backbone of your data-driven organization. We create end-to-end data ecosystems that ensure accurate collection, secure storage, efficient transformation, and a reliable flow of data across your business. From architecting scalable data lakes and warehouses to automating complex ETL/ELT pipelines, we give your teams unified access to high-quality, analytics-ready data. Our solutions are designed for modern demands, whether that means handling real-time streaming data, integrating diverse data sources, or implementing governance frameworks for compliance and transparency. With SparkBrains, your data becomes more than an asset: it becomes a strategic advantage.

Key Features of Our Data Engineering

Scalable Data Pipelines

We design real-time and batch pipelines that handle large volumes of data efficiently, ensuring fast and reliable data movement across systems.

Cloud & Hybrid Architecture

Our solutions are built to make the most of cloud platforms such as AWS, Azure, and GCP, while also supporting on-premises and hybrid environments.

Data Quality & Governance

We implement robust validation, lineage tracking, and governance frameworks to ensure your data is clean, compliant, and trustworthy.
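As a rough illustration of the validation step, the sketch below checks each incoming record against a small set of rules and quarantines failing records together with the reasons they failed, so clean rows can flow on while bad rows are reviewed. The "orders" feed, its field names, the allowed currencies, and the rules themselves are hypothetical; real checks depend on your schema and are usually richer.

```python
from dataclasses import dataclass, field

# Hypothetical rule set for an "orders" feed; adapt to your own schema.
REQUIRED_FIELDS = {"order_id": str, "amount": float, "currency": str}
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}


@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, [errors]) pairs


def check_record(record: dict) -> list:
    """Return a list of problems; an empty list means the record passed."""
    errors = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if record.get(name) is None:
            errors.append(f"missing {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(f"{name} should be {expected_type.__name__}")
    # Value checks only run once presence and types are confirmed.
    if not errors:
        if record["amount"] < 0:
            errors.append("amount must be non-negative")
        if record["currency"] not in ALLOWED_CURRENCIES:
            errors.append(f"unknown currency {record['currency']}")
    return errors


def validate_batch(records) -> ValidationResult:
    """Split a batch into clean records and quarantined records with reasons."""
    result = ValidationResult()
    for record in records:
        errors = check_record(record)
        if errors:
            result.rejected.append((record, errors))
        else:
            result.valid.append(record)
    return result


if __name__ == "__main__":
    batch = [
        {"order_id": "A1", "amount": 19.99, "currency": "USD"},
        {"order_id": "A2", "amount": -5.0, "currency": "USD"},
        {"order_id": "A3", "amount": 10.0},
    ]
    result = validate_batch(batch)
    print(len(result.valid), len(result.rejected))  # 1 2
```

Keeping rejected records alongside their error messages, rather than silently dropping them, is what makes the failures auditable later.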

Integration-Ready Systems

Our systems connect seamlessly with APIs, third-party platforms, IoT devices, and legacy databases, unifying your data ecosystem for analytics and AI.

Tailored Data Engineering Solutions

We build custom data solutions aligned with your specific business goals, tech stack, and industry requirements. There is no one-size-fits-all approach here.

- Industry-Specific Architectures

- Custom ETL/ELT Pipelines

- Flexible Deployment Models

- Modular & Scalable Design
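To make the ELT pattern concrete, here is a minimal sketch using only the Python standard library. An in-memory SQLite database stands in for a cloud warehouse such as Snowflake, and the source data, table names, and columns are hypothetical. Raw rows are extracted, landed unchanged in a staging table, and then cleaned and aggregated with SQL inside the database.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this might come from an API, file drop, or database.
RAW_CSV = """order_id,region,amount
1,north,120.50
2,south,80.00
3,north,30.25
4,east,
"""


def extract(text: str) -> list:
    """Parse CSV text into a list of dicts (the 'E' step)."""
    return list(csv.DictReader(io.StringIO(text)))


def load_raw(conn: sqlite3.Connection, rows: list) -> None:
    """Land rows untouched in a staging table (the 'L' step in ELT)."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, region TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :region, :amount)", rows
    )


def transform(conn: sqlite3.Connection) -> None:
    """Clean and aggregate inside the database with SQL (the 'T' step in ELT)."""
    conn.execute(
        """
        CREATE TABLE sales_by_region AS
        SELECT region,
               ROUND(SUM(CAST(amount AS REAL)), 2) AS total_amount,
               COUNT(*) AS orders
        FROM raw_orders
        WHERE amount <> ''  -- drop rows with a missing amount
        GROUP BY region
        """
    )


def run_pipeline(text: str) -> list:
    """Run extract, load, and transform, then return the aggregated rows."""
    conn = sqlite3.connect(":memory:")
    try:
        load_raw(conn, extract(text))
        transform(conn)
        return conn.execute(
            "SELECT region, total_amount, orders FROM sales_by_region ORDER BY region"
        ).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    print(run_pipeline(RAW_CSV))  # [('north', 150.75, 2), ('south', 80.0, 1)]
```

Loading raw data first and transforming it in SQL keeps the original records available for reprocessing; an ETL variant would instead clean the rows in Python before loading them.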

Essential Tools and Technologies for Effective Data Engineering

We leverage a wide array of modern tools and technologies to build high-performance, scalable, and reliable data systems tailored to your needs.

Apache Airflow

Open-source workflow management platform used to schedule, orchestrate, and monitor complex data pipelines.

Apache Kafka

Distributed event streaming platform ideal for building real-time data pipelines and stream processing applications.

Spark & PySpark

Distributed engine for large-scale data processing, machine learning, and ETL on massive datasets; PySpark is its Python API.

Snowflake

Cloud-native data warehouse offering high performance, scalability, and secure data sharing across platforms.

Databricks

Unified analytics platform combining data engineering, machine learning, and collaborative notebooks on top of Apache Spark.

AWS / Azure / GCP

Cloud platforms that offer robust services for data storage, processing, analytics, and serverless workflows.

dbt (data build tool)

Tool for transforming data in the warehouse with version control, testing, and modular SQL-based workflows.

Fivetran / Talend / Informatica

ETL/ELT integration tools that automate data ingestion from diverse sources into centralized systems.

SQL & NoSQL Databases

Core data stores: relational databases such as PostgreSQL and MySQL for structured data, and NoSQL databases such as MongoDB and Cassandra for semi-structured and unstructured data.